import duckdb

import geopandas as gpdHaving read about DuckDB’s spatial extension, I wanted to check if I could integrate DuckDB into my workflow. I primarily use GeoPandas for my spatial tasks within the Python ecosystem. So in this notebook, I will test how easy it is to integrate DuckDB and GeoPandas.

Installing DuckDB

Installing DuckDB for python is as simple as

pip install duckdbBut I have also installed it locally for the console. See here for installation instructions.

Jupyter Integration

We will use the JupySQL extension for JupyterLab to make using SQL easier with JupyterLab. This allows us to use the %sql or %%sql cell magics. With %sql, whatever follows it will be executed as a SQL command. With %%sql, the entire cell is treated as SQL.

%load_ext sql

con = duckdb.connect()

%sql con --alias duckdbWe will use the spatial extension of duckdb along with the parquet extension for reading/writing parquet files. Installing these extensions is as easy as:

%%sql

INSTALL spatial;

INSTALL parquet;| Success |

|---|

%%sql

LOAD spatial;

LOAD parquet;| Success |

|---|

Now we can read the parquet file.

Reading Data with DuckDB

I will use the NSW landuse dataset for this notebook, which is available here. This dataset captures how the landscape in NSW is being used for food prodcution, forestry, nature conservation, infrastructure and urban development. I have downloaded the shapefile for this dataset, which is 1.5G and has >909k rows.

%%sql

CREATE TABLE nsw_landuse AS SELECT * FROM ST_READ('./NSWLanduse2017v1p2/NSWLanduse2017v1p2.shx');| Count |

|---|

| 909213 |

%sql DESCRIBE nsw_landuse;| column_name | column_type | null | key | default | extra |

|---|---|---|---|---|---|

| Area_Ha | DOUBLE | YES | None | None | None |

| SHAPE_Leng | DOUBLE | YES | None | None | None |

| SHAPE_Area | DOUBLE | YES | None | None | None |

| Secondary | VARCHAR | YES | None | None | None |

| Tertiary | VARCHAR | YES | None | None | None |

| Commodity | VARCHAR | YES | None | None | None |

| Note | VARCHAR | YES | None | None | None |

| geom | GEOMETRY | YES | None | None | None |

Lets compare this to reading with GeoPandas

gdf = gpd.read_file('./NSWLanduse2017v1p2/NSWLanduse2017v1p2.shp')So, while DuckDB can read the file faster than GeoPandas, its not a significant improvement (11s vs 14s). And at this point, we’ve not even converted to a pandas object, which I’ll need to do to integrate with my python workflow.

df = con.sql("SELECT * FROM nsw_landuse").df()Reading Subset of the Data

I’m interested in only the geometry and Secondary columns. Lets see if this is any different.

del df

del gdf

%sql DROP TABLE IF EXISTS nsw_landuse;| Success |

|---|

df = con.sql("""

SELECT Secondary, geom FROM ST_READ('./NSWLanduse2017v1p2/NSWLanduse2017v1p2.shx')

""").df()One thing to note here is that this is not yet a GeoDataFrame, but a pandas DataFrame, which will need to be converted to a GeoDataFrame. Lets do that now.

df = gpd.GeoDataFrame(df, geometry='geom')--------------------------------------------------------------------------- TypeError Traceback (most recent call last) Cell In[11], line 1 ----> 1 df = gpd.GeoDataFrame(df, geometry='geom') File ~/learning/data-science/.venv/lib/python3.11/site-packages/geopandas/geodataframe.py:209, in GeoDataFrame.__init__(self, data, geometry, crs, *args, **kwargs) 204 if hasattr(geometry, "name") and geometry.name not in ("geometry", None): 205 # __init__ always creates geometry col named "geometry" 206 # rename as `set_geometry` respects the given series name 207 geometry = geometry.rename("geometry") --> 209 self.set_geometry(geometry, inplace=True, crs=crs) 211 if geometry is None and crs: 212 raise ValueError( 213 "Assigning CRS to a GeoDataFrame without a geometry column is not " 214 "supported. Supply geometry using the 'geometry=' keyword argument, " 215 "or by providing a DataFrame with column name 'geometry'", 216 ) File ~/learning/data-science/.venv/lib/python3.11/site-packages/geopandas/geodataframe.py:404, in GeoDataFrame.set_geometry(self, col, drop, inplace, crs) 401 crs = getattr(level, "crs", None) 403 # Check that we are using a listlike of geometries --> 404 level = _ensure_geometry(level, crs=crs) 405 # ensure_geometry only sets crs on level if it has crs==None 406 if isinstance(level, GeoSeries): File ~/learning/data-science/.venv/lib/python3.11/site-packages/geopandas/geodataframe.py:63, in _ensure_geometry(data, crs) 61 else: 62 if isinstance(data, Series): ---> 63 out = from_shapely(np.asarray(data), crs=crs) 64 return GeoSeries(out, index=data.index, name=data.name) 65 else: File ~/learning/data-science/.venv/lib/python3.11/site-packages/geopandas/array.py:179, in from_shapely(data, crs) 177 out.append(None) 178 else: --> 179 raise TypeError( 180 "Input must be valid geometry objects: {0}".format(geom) 181 ) 182 arr = np.array(out, dtype=object) 184 return GeometryArray(arr, crs=crs) TypeError: Input must be valid geometry objects: bytearray(b"\x02\x04\x00\x00\x00\x00\x00\x00\x9d^\x0eCG\x02\x05\xc2\xe7`\x0eC\xb7\xfe\x04\xc2\x02\x00\x00\x00\x01\x00\x00\x00\x13\x00\x00\x00\x00\x00\x00\x00\x94QV\xed\t\xcca@\xe8\x1f\xba\x89\xe2\x9f@\xc0T\xde@\xe1\t\xcca@x\x05x\xf1\x0f\xa0@\xc0p\xda\xef\xfd\x0b\xcca@h;n\xd7\x0f\xa0@\xc0\x0cc*N\x1c\xcca@\x08\xb9\nC\x13\xa0@\xc0\xa0fl\xdb\x1c\xcca@\xe8\x82^\x0b<\xa0@\xc0\xac\x16j}\x1c\xcca@\x08\xc7S\x8c<\xa0@\xc0\x10\xa9\x16\xcf\x08\xcca@\xc8\x80\xaf%:\xa0@\xc0\xb4p6v\t\xcca@\xa0<\x15\xcbH\xa0@\xc0\xa0Q\xa4\x0f\xee\xcba@\xc0\xca\xaa\x00F\xa0@\xc0\xcc\x94gB\xe9\xcba@\xd8\x06}5<\xa0@\xc0`\xcb\x9b+\xe9\xcba@\xf8\xd87l%\xa0@\xc0\x0cvcD\xe9\xcba@\xc8\xb3@X\x19\xa0@\xc0H\x90Z\x93\xd4\xcba@\x00\xed\x9ae\x16\xa0@\xc0|\xdb\xaf\xaf\xd3\xcba@\xc0K\tQ\x16\xa0@\xc0P\xbc1t\xd4\xcba@\xc0\x10|\xe3\xd6\x9f@\xc00\x14\xd1+\xd5\xcba@\xd8l\x9e\xe3\xd6\x9f@\xc0\xfc\x81\x87v\xe9\xcba@\xa8D\x07\'\xd9\x9f@\xc0\x046\xfaz\xe9\xcba@\xc8\x050\x90\xe0\x9f@\xc0\x94QV\xed\t\xcca@\xe8\x1f\xba\x89\xe2\x9f@\xc0")

which gives an error because with DuckDB the resulting dataframe’s geom column is in the bytearray format and cannot be read directly into a GeoPandas GeoDataFrame. The workaround is to ask DuckDB to read it as text (WKT), and then ask GeoPandas to read that.

df = con.sql("""

SELECT Secondary, ST_AsText(geom) AS geometry FROM ST_READ('./NSWLanduse2017v1p2/NSWLanduse2017v1p2.shx')

""").df()df = gpd.GeoDataFrame(df)df['geometry'] = gpd.GeoSeries.from_wkt(df['geometry'])

df = df.set_geometry('geometry')But the from_wkt seems like a very expensive operation (~35s), which makes using DuckDB to read shapefiles for use with GeoPandas not worth it.

gdf = gpd.read_file('./NSWLanduse2017v1p2/NSWLanduse2017v1p2.shp', columns=['Secondary', 'geom'])Parquet Files

Having read the shapefile, I always save it as a parquet file for fast i/o operations later on. Let’s see if there’s any performance difference in reading/writing parquet files with DuckDB and GeoPandas. For this, lets save the gdf from earlier as a parquet file.

gdf.to_parquet('nsw_landuse.parquet')# filter by category and get result as a dataframe

df = con.sql(f"""

SELECT Secondary, ST_AsText(geometry) AS geometry

FROM './NSWLanduse2017v1p2/nsw_landuse.parquet'

WHERE Secondary == '3.3.0 Cropping'

""").df()

gdf = gpd.GeoDataFrame(df)

gdf['geometry'] = gpd.GeoSeries.from_wkt(df['geometry'])

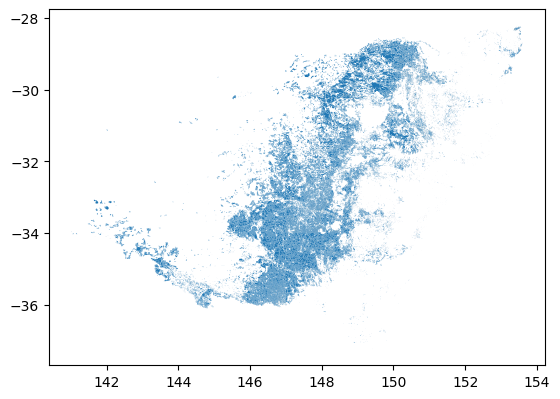

gdf = gdf.set_geometry('geometry')Reading the parquet file and converting to GeoPandas takes ~6s.

GeoPandas’ read_parquet, unfortunately doesn’t support filtering the rows like we did above with DuckDB. And this looks like how I will be using DuckDB until this feature becomes available in GeoPandas. Currently read_parquet only supports filtering columns.